RAG: Making AI Answer From Your Documents (Part 4/7)

This is Part 4 of a 7-part series on AI Fundamentals: RAG (Retrieval Augmented Generation), the technique that lets AI answer questions based on your documents.

Last time, we covered tool calling - that’s how AI agents orchestrate actions by asking you to execute functions.

This time, we’re tackling RAG (Retrieval Augmented Generation), the technique that lets AI answer questions based on your documents.

You’ll hear a lot about RAG in the industry:

“You need vector databases!”

“You need embedding models!”

“Complex chunking strategies required!”

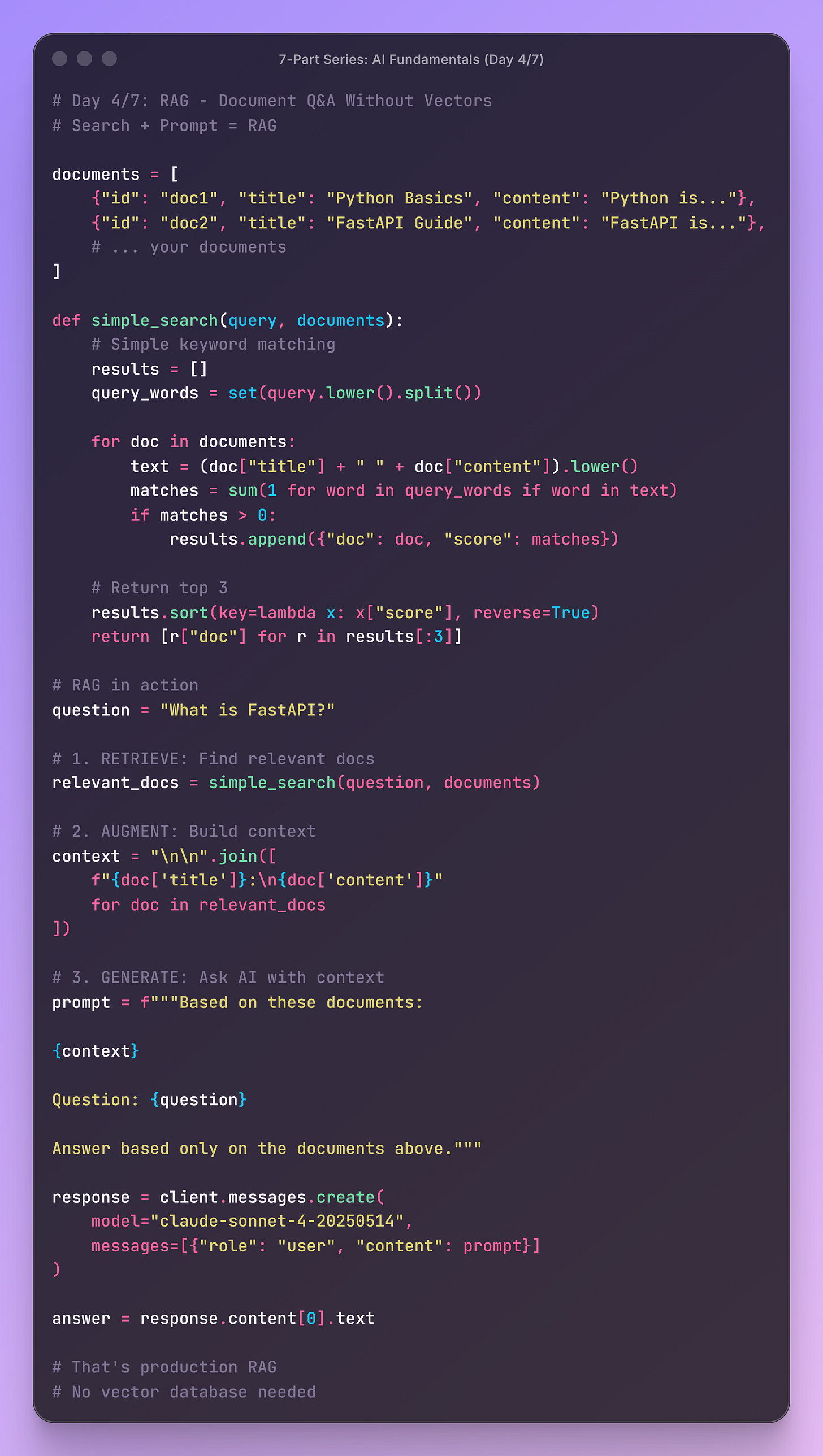

Here’s the truth: RAG is search + put in prompt + ask AI.

That’s it. Simple keyword search works for many use cases.

Start simple. Add complexity only when simple breaks.

What RAG Actually Is

Without RAG, AI can only answer based on its training data. It doesn’t know about:

Your company’s internal documents

Recent news or updates

Your product specifications

Customer data or tickets

Your knowledge base articles

RAG solves this by giving AI access to your documents. Here’s the three-step process:

1. RETRIEVE - Find relevant documents

2. AUGMENT - Put them in the prompt as context

3. GENERATE - Ask AI to answer based on those documents

That’s literally all RAG is. Let me show you.

Your Document Collection

First, you need documents. In this example, we’ll use a simple Python list:

# This is a SIMPLE PYTHON LIST!

DOCUMENTS = [

{

“id”: “doc1”,

“title”: “Python Basics”,

“content”: “”“

Python is a high-level programming language known for its

simplicity and readability. It was created by Guido van Rossum

and first released in 1991. Python supports multiple programming

paradigms including procedural, object-oriented, and functional

programming. Common use cases include web development, data

science, automation, and artificial intelligence.

“”“

},

{

“id”: “doc2”,

“title”: “FastAPI Framework”,

“content”: “”“

FastAPI is a modern, fast web framework for building APIs with

Python. It was created by Sebastián Ramírez and first released

in 2018. FastAPI is built on top of Starlette and Pydantic,

providing automatic API documentation, data validation, and

high performance. It’s one of the fastest Python frameworks

available, comparable to NodeJS and Go.

“”“

},

{

“id”: “doc3”,

“title”: “Machine Learning Basics”,

“content”: “”“

Machine learning is a subset of artificial intelligence that

enables systems to learn and improve from experience without

being explicitly programmed. There are three main types:

supervised learning, unsupervised learning, and reinforcement

learning. Popular frameworks include TensorFlow, PyTorch, and

scikit-learn.

“”“

}

]In production, you’d load these from:

Files (PDF, TXT, DOCX)

Databases (PostgreSQL, MongoDB)

APIs (Notion, Google Docs, Confluence)

Content management systems

But the concept stays the same: a collection of documents with IDs, titles, and content.

Step 1: Simple Keyword Search

The “retrieval” part of RAG is just search. Here’s a simple keyword search function. Note: this is pure Python. Nothing else.

def simple_keyword_search(query: str, documents: list, max_results: int = 3) -> list:

“”“

Simple keyword-based search.

For many use cases, this works surprisingly well!

“”“

query_lower = query.lower()

query_words = set(query_lower.split())

results = []

for doc in documents:

# Combine title and content for searching

searchable_text = (doc[”title”] + “ “ + doc[”content”]).lower()

# Count how many query words appear in the document

matches = sum(1 for word in query_words if word in searchable_text)

if matches > 0:

results.append({

“doc”: doc,

“score”: matches # Simple relevance score

})

# Sort by relevance (most matches first)

results.sort(key=lambda x: x[”score”], reverse=True)

# Return top results

return [r[”doc”] for r in results[:max_results]]What this does:

Splits the query into words

Checks each document for those words

Counts matches as a relevance score

Returns the top N most relevant documents

Example:

results = simple_keyword_search(”What is FastAPI?”, DOCUMENTS, max_results=3)

# Returns:

# [

# {”id”: “doc2”, “title”: “FastAPI Framework”, “content”: “...”},

# {”id”: “doc1”, “title”: “Python Basics”, “content”: “...”}

# ]The FastAPI document ranks first (contains “FastAPI”), Python Basics ranks second (contains “Python” and “API”).

Is this sophisticated? No. Does it work? Absolutely. More importantly, it’ll show you the CONCEPTS that are behind creating an effective RAG system.

Step 2 & 3: Augment and Generate

Now we combine retrieval with AI. Note: I’ll be using the Anthropic API to demonstrate, but the same principles apply to OpenAI (keep reading for code).

Here’s the complete RAG function:

def rag_query(question: str, documents: list, max_context_docs: int = 3) -> str:

“”“

RAG in 3 steps:

1. RETRIEVE: Find relevant documents

2. AUGMENT: Put them in the prompt

3. GENERATE: Ask Claude to answer based on the documents

“”“

# STEP 1: RETRIEVE relevant documents

relevant_docs = simple_keyword_search(question, documents, max_context_docs)

if not relevant_docs:

return “I couldn’t find any relevant information to answer your question.”

# STEP 2: AUGMENT - Build context from retrieved documents

context = “”

for i, doc in enumerate(relevant_docs, 1):

context += f”Document {i} - {doc[’title’]}:\n”

context += doc[”content”].strip()

context += “\n\n”

# STEP 3: GENERATE - Ask Claude

prompt = f”“”Based on the following documents, please answer the question.

Documents:

{context}

Question: {question}

Instructions:

- Answer based ONLY on the information in the documents above

- If the documents don’t contain enough information, say so

- Be specific and cite which document you’re referencing

- Keep your answer concise and clear”“”

response = client.messages.create(

model=”claude-sonnet-4-20250514”,

max_tokens=1024,

messages=[{”role”: “user”, “content”: prompt}]

)

return response.content[0].textLet’s see it in action:

question = “What is FastAPI and who created it?”

answer = rag_query(question, DOCUMENTS)

print(answer)Output:

According to Document 1 (FastAPI Framework), FastAPI is a modern,

fast web framework for building APIs with Python. It was created by

Sebastián Ramírez and first released in 2018. FastAPI is built on

top of Starlette and Pydantic, providing automatic API documentation,

data validation, and high performance. It’s noted as one of the

fastest Python frameworks available, comparable to NodeJS and Go.What just happened:

Retrieved the “FastAPI Framework” document (highest keyword match)

Augmented the prompt with that document’s content

Generated an answer based on the provided context

Claude answered the question using ONLY the information in the document. It didn’t use its training data - it used your documents.

Understanding Each Step

Let’s break down what makes this work:

The Retrieval Step

relevant_docs = simple_keyword_search(question, documents, max_context_docs)This finds documents likely to contain the answer. The better your search, the better your answers.

Search quality matters:

Good search = relevant documents = accurate answers

Poor search = irrelevant documents = “I don’t have that information”

Simple keyword search works when:

Documents use similar terminology to queries

You have relatively few documents (under 10,000).

Exact word matches are important

📦 Can simple keyword search handle 10,000 documents?

Yes - and much more. Simple keyword search can handle 50,000+ documents with basic optimization (indexing, caching).

The real limitation isn’t document count. It’s search quality. As your corpus grows, keyword matching produces more false positives. That’s when semantic search (embeddings) becomes valuable.

For most internal knowledge bases and documentation sites, keyword search scales further than you think.

The Augmentation Step

context = “”

for i, doc in enumerate(relevant_docs, 1):

context += f”Document {i} - {doc[’title’]}:\n”

context += doc[”content”].strip()

context += “\n\n”This builds a string containing all relevant documents. We’re literally just concatenating text.

Why this works: AI context windows are large (Claude Sonnet 4 has 200K tokens). You can fit dozens of documents in a single prompt.

The Generation Step

prompt = f”“”Based on the following documents, please answer the question.

Documents:

{context}

Question: {question}

Instructions:

- Answer based ONLY on the information in the documents above

- If the documents don’t contain enough information, say so

- Be specific and cite which document you’re referencing”“”

response = client.messages.create(

model=”claude-sonnet-4-20250514”,

max_tokens=1024,

messages=[{”role”: “user”, “content”: prompt}]

)The prompt structure is critical. Notice:

We provide documents first (so Claude sees them before the question)

We give explicit instructions to only use provided information

We ask for citations (which document the answer came from)

This prevents AI from “hallucinating” answers using its training data when your documents don’t have the information.

Production Enhancement: Citations

In production, users need to know where information came from. Here’s an enhanced version that returns sources:

def rag_query_with_citations(

question: str,

documents: list,

max_context_docs: int = 3

) -> dict:

“”“

RAG with source citations.

Returns both the answer and which documents were used.

“”“

# Retrieve documents

relevant_docs = simple_keyword_search(question, documents, max_context_docs)

if not relevant_docs:

return {

“answer”: “I couldn’t find any relevant information to answer your question.”,

“sources”: []

}

# Build context with document IDs for citation

context = “”

for i, doc in enumerate(relevant_docs, 1):

context += f”[Document {i} - {doc[’title’]} (ID: {doc[’id’]})]:\n”

context += doc[”content”].strip()

context += “\n\n”

# Ask Claude to cite sources

prompt = f”“”Based on the following documents, please answer the question.

Documents:

{context}

Question: {question}

Instructions:

- Answer based ONLY on the information in the documents above

- When stating facts, mention which document number you’re referencing

(e.g., “According to Document 1...”)

- If the documents don’t contain enough information, say so clearly

- Be specific and accurate”“”

response = client.messages.create(

model=”claude-sonnet-4-20250514”,

max_tokens=1024,

messages=[{”role”: “user”, “content”: prompt}]

)

# Return answer with sources

return {

“answer”: response.content[0].text,

“sources”: [

{

“id”: doc[”id”],

“title”: doc[”title”],

“content”: doc[”content”]

}

for doc in relevant_docs

]

}Usage:

result = rag_query_with_citations(

“Who created Python?”,

DOCUMENTS

)

print(result[”answer”])

# Output: “According to Document 1 (Python Basics), Python was

# created by Guido van Rossum and first released in 1991.”

print(”\nSources used:”)

for source in result[”sources”]:

print(f”- {source[’title’]} (ID: {source[’id’]})”)

# Output:

# Sources used:

# - Python Basics (ID: doc1)This gives users transparency. They can verify the answer by checking the source documents.

Complete Working Example

Here’s everything together:

###############################

# ANTHROPIC API RAG

###############################

import os

from anthropic import Anthropic

from dotenv import load_dotenv

load_dotenv()

client = Anthropic(api_key=os.getenv(”ANTHROPIC_API_KEY”))

# Your documents

DOCUMENTS = [

{

“id”: “doc1”,

“title”: “Python Basics”,

“content”: “”“

Python is a high-level programming language known for its

simplicity and readability. It was created by Guido van Rossum

and first released in 1991. Python supports multiple programming

paradigms including procedural, object-oriented, and functional

programming. Common use cases include web development, data

science, automation, and artificial intelligence.

“”“,

},

{

“id”: “doc2”,

“title”: “FastAPI Framework”,

“content”: “”“

FastAPI is a modern, fast web framework for building APIs with

Python. It was created by Sebastián Ramírez and first released

in 2018. FastAPI is built on top of Starlette and Pydantic,

providing automatic API documentation, data validation, and

high performance. It’s one of the fastest Python frameworks

available, comparable to NodeJS and Go.

“”“,

},

{

“id”: “doc3”,

“title”: “Machine Learning Basics”,

“content”: “”“

Machine learning is a subset of artificial intelligence that

enables systems to learn and improve from experience without

being explicitly programmed. There are three main types:

supervised learning, unsupervised learning, and reinforcement

learning. Popular frameworks include TensorFlow, PyTorch, and

scikit-learn.

“”“,

},

]

# Simple keyword search

def simple_keyword_search(query: str, documents: list, max_results: int = 3):

query_lower = query.lower()

query_words = set(query_lower.split())

results = []

for doc in documents:

searchable_text = (doc[”title”] + “ “ + doc[”content”]).lower()

matches = sum(1 for word in query_words if word in searchable_text)

if matches > 0:

results.append({”doc”: doc, “score”: matches})

results.sort(key=lambda x: x[”score”], reverse=True)

return [r[”doc”] for r in results[:max_results]]

# RAG query function

def rag_query(question: str, documents: list, max_context_docs: int = 3):

# 1. RETRIEVE

relevant_docs = simple_keyword_search(question, documents, max_context_docs)

if not relevant_docs:

return “I couldn’t find any relevant information.”

# 2. AUGMENT

context = “”

for i, doc in enumerate(relevant_docs, 1):

context += f”Document {i} - {doc[’title’]}:\n”

context += doc[”content”].strip() + “\n\n”

# 3. GENERATE

prompt = f”“”Based on the following documents, answer the question.

Documents:

{context}

Question: {question}

Answer based ONLY on the documents above.”“”

response = client.messages.create(

model=”claude-sonnet-4-20250514”,

max_tokens=1024,

messages=[{”role”: “user”, “content”: prompt}],

)

return response.content[0].text

# Use it

answer = rag_query(”What is FastAPI?”, DOCUMENTS)

print(answer)This is the pattern for production RAG. No vector database. No embeddings. Just search, context, and AI.

When to Upgrade Beyond Keyword Search

Simple keyword search works until it doesn’t. You’ll know it’s time to upgrade when:

Symptom: Users ask “What are Python frameworks?” but documents say “FastAPI is a web framework for Python” (no exact word match for “frameworks”)

Solution: Add stemming or lemmatization to match word variations

Symptom: Users ask “How do I build APIs?” but documents only mention “REST endpoints” and “web services” (semantic mismatch)

Solution: Consider semantic search with embeddings

Symptom: You have 50,000+ documents and keyword search is too slow

Solution: Add indexing or use a search engine (Elasticsearch)

Symptom: Documents are in multiple languages

Solution: Use multilingual embeddings

But for many applications, like internal knowledge bases, documentation sites, customer support with a few hundred articles, keyword search is enough.

What About OpenAI?

The pattern is identical with OpenAI. The only difference is the API call in step 3:

Search Function (Identical)

def simple_keyword_search(query, documents, max_results=3):

# Exact same code as Anthropic version

# Search logic doesn’t depend on which LLM you use

passRAG Function (Almost Identical)

def rag_query(question: str, documents: list, max_context_docs: int = 3):

# Steps 1 & 2: Identical to Anthropic

relevant_docs = simple_keyword_search(question, documents, max_context_docs)

context = “”

for i, doc in enumerate(relevant_docs, 1):

context += f”Document {i} - {doc[’title’]}:\n{doc[’content’]}\n\n”

prompt = f”“”Based on the following documents, answer the question.

Documents:

{context}

Question: {question}

Answer based ONLY on the documents above.”“”

# Step 3: Different API call

response = client.chat.completions.create(

model=”gpt-4o”,

max_tokens=1024,

messages=[{”role”: “user”, “content”: prompt}]

)

# Different response structure

return response.choices[0].message.contentKey differences:

API call:

client.chat.completions.create()vsclient.messages.create()Response structure:

response.choices[0].message.contentvsresponse.content[0].text

Everything else, the search logic, context building, prompt structure, is identical.

Here’s the full working example using OpenAI:

###############################

# OPENAI API RAG

###############################

import os

from openai import OpenAI

from dotenv import load_dotenv

load_dotenv()

client = OpenAI(api_key=os.getenv(”OPENAI_API_KEY”))

# Your documents

DOCUMENTS = [

{

“id”: “doc1”,

“title”: “Python Basics”,

“content”: “”“

Python is a high-level programming language known for its

simplicity and readability. It was created by Guido van Rossum

and first released in 1991. Python supports multiple programming

paradigms including procedural, object-oriented, and functional

programming. Common use cases include web development, data

science, automation, and artificial intelligence.

“”“,

},

{

“id”: “doc2”,

“title”: “FastAPI Framework”,

“content”: “”“

FastAPI is a modern, fast web framework for building APIs with

Python. It was created by Sebastián Ramírez and first released

in 2018. FastAPI is built on top of Starlette and Pydantic,

providing automatic API documentation, data validation, and

high performance. It’s one of the fastest Python frameworks

available, comparable to NodeJS and Go.

“”“,

},

{

“id”: “doc3”,

“title”: “Machine Learning Basics”,

“content”: “”“

Machine learning is a subset of artificial intelligence that

enables systems to learn and improve from experience without

being explicitly programmed. There are three main types:

supervised learning, unsupervised learning, and reinforcement

learning. Popular frameworks include TensorFlow, PyTorch, and

scikit-learn.

“”“,

},

]

# Simple keyword search (identical to Anthropic version)

def simple_keyword_search(query: str, documents: list, max_results: int = 3):

query_lower = query.lower()

query_words = set(query_lower.split())

results = []

for doc in documents:

searchable_text = (doc[”title”] + “ “ + doc[”content”]).lower()

matches = sum(1 for word in query_words if word in searchable_text)

if matches > 0:

results.append({”doc”: doc, “score”: matches})

results.sort(key=lambda x: x[”score”], reverse=True)

return [r[”doc”] for r in results[:max_results]]

# RAG query function (OpenAI version)

def rag_query(question: str, documents: list, max_context_docs: int = 3):

# 1. RETRIEVE

relevant_docs = simple_keyword_search(question, documents, max_context_docs)

if not relevant_docs:

return “I couldn’t find any relevant information.”

# 2. AUGMENT

context = “”

for i, doc in enumerate(relevant_docs, 1):

context += f”Document {i} - {doc[’title’]}:\n”

context += doc[”content”].strip() + “\n\n”

# 3. GENERATE

prompt = f”“”Based on the following documents, answer the question.

Documents:

{context}

Question: {question}

Answer based ONLY on the documents above.”“”

response = client.chat.completions.create(

model=”gpt-4o”,

max_tokens=1024,

messages=[{”role”: “user”, “content”: prompt}],

)

return response.choices[0].message.content

# Use it

answer = rag_query(”What is FastAPI?”, DOCUMENTS)

print(answer)Why Understanding This Matters

Now you know what RAG actually is. When someone mentions their “RAG system,” you understand what’s happening:

They’re searching documents (maybe with keywords, maybe with vectors)

They’re putting those documents in a prompt

They’re asking an LLM to answer based on that context

Whether they use additional tools to manage this at scale or build it themselves, the fundamental pattern is the same.

This knowledge lets you:

Evaluate tools effectively - Understand what they’re actually doing

Debug problems - Know where to look when answers are wrong (retrieval? prompt? generation?)

Optimize costs - See where tokens are being used

Make architectural decisions - Know when you need more sophistication and when simple works

Try It Yourself

Run the complete example above and experiment:

Add your own documents - Create a knowledge base for your domain

Test different queries - See what gets retrieved

Adjust max_results - Try retrieving 1, 3, or 5 documents

Modify the prompt - Change instructions and see how it affects answers

Compare providers - Run the same query through Claude and GPT

What’s Coming Next

You now understand basic RAG - how to make AI answer questions based on your documents.

Next up: Conversational RAG. We’re adding conversation memory to RAG so users can ask follow-up questions.

“What is FastAPI?” → “Who created it?” → “When was it released?”

You’ll see how combining concepts from previous articles (conversation history + RAG) creates a powerful document chatbot.

The pattern stays simple.

This is Part 4 of a 7-part series on AI Fundamentals. All code examples are available in the GitHub repository. Next up: Conversational RAG for follow-up questions.

Thanks for writing this, it clarifies a lot. I really appreciate the emphasis on starting simple with RAG. Keyword search indeed goes a long way. However, for more complex semantic understanding or very large document sets, vector embeddings become crucial for relevance and performance.